Alright, let's get straight in and understand how exactly the internal implementation of HashMaps is done in Java.

Not going to bore you with yet another explanation of what hashing is and what hash maps are since that's not the primary focus here. This article aims at explaining how the functionalities of HashMaps are implemented under the hood for those of you who have been using them for a long time but never really bothered to see how it actually works.

End Goal

So what do we ultimately expect the HashMap to do for us?

Needs to store the keys based on their hash value [Hash function]

Needs to take care of collisions when two different keys end up giving the same hash value

Needs to be able to dynamically resize efficiently

Let's try visiting each of these objectives in detail and take a peek into how they are achieved.

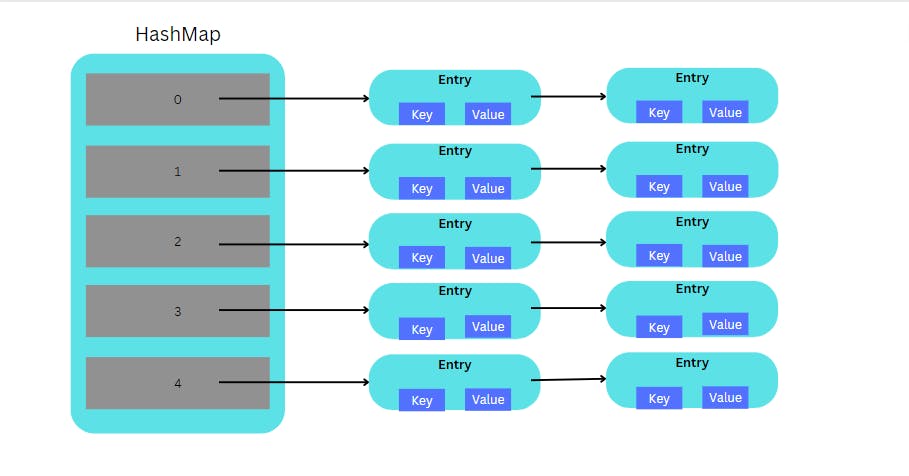

Before that, this is the basic Linked List that's going to hold each entry. Nothing major here. We obviously need a key, a value and the next pointer to point to the next entry with the same index in the hashmap.

public class Entry<K, V> {

private K key;

private V value;

private Entry<K, V> next;

Entry(K key, V value, Entry<K, V> next) {

this.key = key;

this.value = value;

this.next = next;

}

public K getKey() { return key; }

public void setKey(K key) {

this.key = key;

}

public V getValue() {

return value;

}

public void setValue(V value) {

this.value = value;

}

public Entry<K, V> getNext() {

return next;

}

public void setNext(Entry<K, V> next) {

this.next = next;

}

}

Java HashMap Terminologies

Default Capacity - The default size of the Java HashMap is initialized to 16 and is always defined as a power of 2 upto 2³⁰. This can be explicitly specified while creating the HashMap using the parameterized constructor.

Load Factor - Now this is basically used to control at what point exactly should the HashMap be resized. The threshold is determined by (Default Capacity * Load Factor).

The default value for the load factor is 0.75 so for the default scenario, the HashMap would be resized when there are (16*0.75 = 12) entries.

[Pro Tip: If you are sure you would be using a lot of entries, it's always better to initialize the HashMap using the parameterized constructor by explicitly passing the custom size to it so that you don't have to waste time resizing everytime]

Now let's revisit our basic objectives that we defined earlier

Effective Hash Generation

Now, if you are familiar with Java and have used HashMaps with custom objects as keys, you would know that you need to define the hashcode() function for your custom class. Similarly, for other built-in objects like Strings we have an hashcode method predefined to use.

Now let's think about hashcodes that come from user-defined objects. We cannot always guarantee that they would be uniformly spread. What I mean by this is that let's say we have a Car class and we have the hashcode method overridden so that the hashcode is generated as the (car number)*(manufacturer ID). Now if the manufacture ID happens to be even, then all the resulting products end up being even. This would just result in a HashMap where the odd places are sitting freely and the even places are just facing a lot of collisions which is super ineffective.

So the brilliant folks at Java added an extra hash method that generates an effective hashcode from the already implemented hashcode of the object which ensures that the hashcodes are uniformly distributed.

Now that we have ensured the uniform distribution, there is one more thing to consider and that's the lower significant bits of the generated hash codes.

So let's consider the default hashmap of size 16. Its indices are going to be as follows:

0000 - 0 1000 - 8

0001 - 1 1001 - 9

0010 - 2 1010 - 10

0011 - 3 1011 - 11

0100 - 4 1100 - 12

0101 - 5 1101 - 13

0110 - 6 1110 - 14

0111 - 7 1111 - 15

What we do to map our large hashcode values that look like 1100001111010011100110101010001 to proper index values in our hash table is this function:

int getIndex(int hash, int capacity) {

return hash & (capacity - 1);

}

What it does is the retaining of the lower significant bits alone that can be used as an index in our hash table.

1100001111010011100110101010001 & 1111([(capacity-1=15) in binary]) -> 0001

Now let's say we have a fairly uniform distributed hashing strategy and then we get the below hashcodes

110000111101001110011010101**0001**

110100000100011110011010101**0001**

101010101010101000111001011**0001**

What you see here is that the lower significant digits of all three hashcodes are 0001 which would mean all these would be saved at index 1 of the hash table and that is not desired.

Therefore, the below hash code takes care of 2 things:

Uniform distribution of the hashcodes so that there is a good variation between 2 different hashcodes

Considerable variance of bits especially among the lower significant bits

int hash(int hashcode) {

hashcode ^= (hashcode >>> 20) ^ (hashcode >>> 12);

return hashcode ^ (hashcode >>> 7) ^ (hashcode >>> 4);

}

Lot of random fancy magical numbers?! Well yeah that's something which is always under constant research to optimize things better. Essentially all we have to understand is that it helps achieve our primary objective of effectively generating indices from hashcodes.

Managing Collisions

Now things should be fairly clear. How do we manage collisions?

Yes by using the linked list data structure and adding the newer elements to the linked list in the case of collisions.

Let's see a rough implementation of the put and get methods of the hashmap respectively to better understand.

I've commented the code fairly well so that it's easy to read and pretty self-explanatory.

public void put(K key, V value) {

// Generation of the index (both the methods have been explained)

int hash = hash(key.hashCode());

int index = getIndex(hash, capacity);

Entry entry = new Entry(key, value, null);

// If there is no value already present at that index, then just insert the new entry there

if (table[index] == null) {

table[index] = entry;

}

// In case of a value already being present at the particular index

else {

Entry currentEntry = table[index];

Entry previousEntry = null;

// Iterate through the Linked List

while(currentEntry != null) {

// If there exists a node with the key already present, then replace its value

if (currentEntry.getKey().equals(hash)) {

currentEntry.setValue(value);

return;

}

previousEntry = currentEntry;

currentEntry = currentEntry.getNext();

}

// If key wasn't present then this would be the last occupied node so set the new entry as its next node

if (previousEntry != null) {

previousEntry.setNext(entry);

}

}

}

public V get(K key) {

// Generation of the index

int hash = hash(key.hashCode());

int index = getIndex(hash, capacity);

// If no element is present at the index return null

if (table[index] == null) {

return null;

} else {

// Iterate over the linked list and return if the key matches

Entry current = table[index];

while(current != null) {

if (current.getKey() == key) {

return (V) current.getValue();

}

current = current.getNext();

}

return null;

}

}

Resizing of the HashMap

As explained earlier, we make use of the load factor to determine when we really want to start resizing. When the threshold (load factor*capacity) is reached then the hash map is resized to double the initial capacity.

Here's a basic implementation of the resize method which can be called from the put method whenever the size exceeds the threshold

private void resize(int newCapacity) {

Entry[] old = table;

Entry[] newTable = new Entry[newCapacity];

for(int i=0;i<old.length;i++) {

Entry e = old[i];

if (e != null) {

old[i] = null;

do {

Entry next = e.getNext();

int index = getIndex(next.hashCode(), newCapacity);

e.setNext(newTable[index]);

newTable[index] = e;

e = next;

} while(e != null);

}

}

}

Conclusion

So to summarize, we saw how the functionalities of the HashMap Collection is implemented under the hood and can now make sense of the various features are achieved. It would fairly feel comfortable to go through the actual Java source code after having read this article. This is where I was stuck and finally decided to get my hands dirty and implement it completely on my own (github: WILT/Day 1 at main · jivjen/WILT (github.com)) and yeah that's What I Learnt Today